Introduction:

In today’s data-driven world, the role of a Data Engineer has become increasingly important. Data Engineers are responsible for managing and transforming vast amounts of data to enable effective analysis and decision-making within organizations.

If you aspire to become a Data Engineer, this article will provide you with a comprehensive roadmap to guide you through the necessary skills and tools needed to succeed in this field.

Understanding the Role of a Data Engineer:

To comprehend the role of a Data Engineer, let’s consider an example. Baskin Robbins, a popular ice cream outlet, operates multiple stores across different cities. Each store generates data on customers, daily sales, popular flavors, and stock management. This data needs to be analyzed and interpreted to make informed business decisions.

However, this data arrives as a mix of structured and unstructured records in various formats, such as tables, surveys, and feedback forms. It is the responsibility of a Data Engineer to collect, sort, and deliver this data to Data Analysts and Data Scientists, ensuring they have access to clean, organized, and reliable data for analysis and decision-making.

Technical Responsibilities of a Data Engineer:

At a technical level, a Data Engineer’s primary role is to design and create systems capable of extracting, transforming, and loading (ETL) large-scale data efficiently. The key skills and tools needed to carry out these responsibilities are:

1. Programming Language:

To embark on your journey as a Data Engineer, you need to have a solid foundation in a programming language. The three main options for programming languages in the field of data are Java, Scala, and Python. Python is recommended for beginners due to its simplicity and extensive libraries that aid in data engineering tasks.

Familiarize yourself with the basics of Python, including variables, data types, operators, loops, conditional statements, functions, and data structures. Pay special attention to NumPy and Pandas, which are widely used for data manipulation, and to Matplotlib, which is used for plotting.
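As a small illustration of these basics, the sketch below uses only built-in Python features (a list of dictionaries, a loop, a conditional, and a function) to total sales per flavor. The sample records and the function name `total_sales_by_flavor` are made up for this example.

```python
from collections import defaultdict

# Hypothetical sample records, as a store might report them.
SALES = [
    {"store": "Downtown", "flavor": "Vanilla", "scoops": 120},
    {"store": "Downtown", "flavor": "Mint", "scoops": 45},
    {"store": "Airport", "flavor": "Vanilla", "scoops": 80},
    {"store": "Airport", "flavor": "Mint", "scoops": 0},
]


def total_sales_by_flavor(records):
    """Sum scoops sold per flavor, skipping records with no sales."""
    totals = defaultdict(int)
    for record in records:
        if record["scoops"] > 0:  # conditional: ignore empty records
            totals[record["flavor"]] += record["scoops"]
    return dict(totals)


if __name__ == "__main__":
    print(total_sales_by_flavor(SALES))
```

The same aggregation is a one-line `groupby` in Pandas, but writing it by hand first makes the library version easier to reason about.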

2. Fundamentals of Computing:

Understanding the fundamentals of computing is crucial for a Data Engineer. It includes working in a Linux environment, shell scripting, managing the environment, working with APIs, and knowledge of web scraping.

The Requests library in Python can be used to interact with APIs and extract data. Additionally, learn version control with Git and hosting platforms like GitHub, which are essential for collaboration and managing code changes when working with a team.
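A minimal sketch of pulling data from an API with Requests might look like the following. The endpoint layout (`/stores/<id>/sales`), the `date` parameter, and the function name `fetch_daily_sales` are assumptions made for illustration, not a real service. The `http_get` argument is also an assumption of this sketch: it lets the function be tried without network access, and it defaults to `requests.get`.

```python
from typing import Any, Callable, Optional


def fetch_daily_sales(
    base_url: str,
    store_id: str,
    date: str,
    http_get: Optional[Callable[..., Any]] = None,
) -> Any:
    """Fetch one store's sales for one day from a hypothetical JSON API."""
    if http_get is None:
        # Imported lazily so the sketch loads even without the package.
        import requests  # third-party: pip install requests

        http_get = requests.get

    response = http_get(
        f"{base_url}/stores/{store_id}/sales",  # hypothetical endpoint
        params={"date": date},                  # hypothetical query parameter
        timeout=10,                             # avoid hanging on a slow server
    )
    response.raise_for_status()  # raise an error on 4xx/5xx responses
    return response.json()       # parse the JSON body into Python objects
```

Setting a timeout and calling `raise_for_status()` are small habits that keep a pipeline from silently hanging or loading an error page as data.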

3. Databases and DBMS:

A strong understanding of databases and database management systems (DBMS) is essential for a Data Engineer. Gain knowledge of SQL (Structured Query Language) and of both relational (SQL) and NoSQL databases. SQL databases such as PostgreSQL and MySQL are used for transactional data, while NoSQL databases like MongoDB and Cassandra excel at handling unstructured data and scaling horizontally.

Learn advanced concepts like joins, subqueries, normalization, and denormalization to manipulate and retrieve data efficiently.
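To make joins and subqueries concrete, here is a small sketch that uses Python's built-in `sqlite3` module with an in-memory database. The `stores` and `sales` tables and their contents are invented for illustration; the same SQL would carry over to PostgreSQL or MySQL with little or no change.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway database, nothing written to disk
conn.executescript("""
CREATE TABLE stores (id INTEGER PRIMARY KEY, city TEXT NOT NULL);
CREATE TABLE sales (
    store_id INTEGER NOT NULL REFERENCES stores(id),
    flavor   TEXT    NOT NULL,
    scoops   INTEGER NOT NULL
);
INSERT INTO stores VALUES (1, 'Austin'), (2, 'Boston');
INSERT INTO sales VALUES (1, 'Vanilla', 120), (1, 'Mint', 45), (2, 'Vanilla', 80);
""")

# Join: combine the two normalized tables to get total scoops per city.
per_city = conn.execute("""
    SELECT st.city, SUM(sa.scoops) AS total
    FROM sales AS sa
    JOIN stores AS st ON st.id = sa.store_id
    GROUP BY st.city
    ORDER BY st.city
""").fetchall()

# Subquery: keep only the sales rows above the overall average.
above_avg = conn.execute("""
    SELECT flavor, scoops
    FROM sales
    WHERE scoops > (SELECT AVG(scoops) FROM sales)
    ORDER BY scoops DESC
""").fetchall()

print(per_city)
print(above_avg)
```

Keeping the city name in `stores` rather than repeating it on every `sales` row is a simple case of normalization; a denormalized reporting table would store the city alongside each sale to avoid the join.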

4. Cloud Computing:

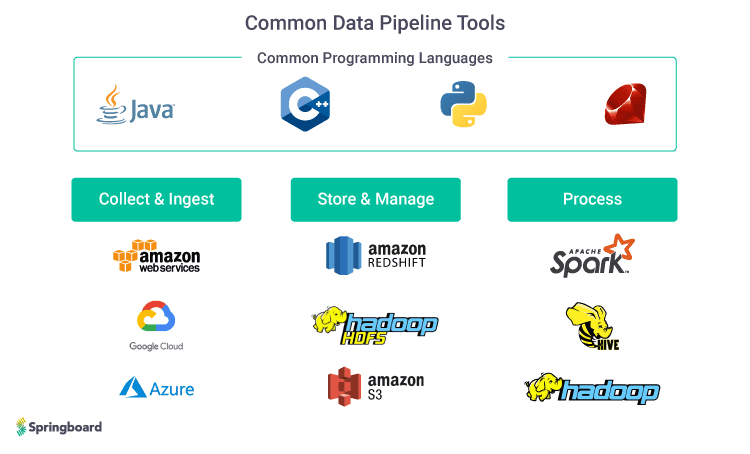

Cloud computing is integral to modern data engineering practices. Working with large-scale data requires the power and flexibility offered by cloud platforms. Two prominent cloud platforms are Amazon Web Services (AWS) and Google Cloud Platform (GCP).

Start by choosing one of these platforms and familiarize yourself with its services, such as data storage, computing resources, and data processing tools. Both AWS and GCP provide a wide range of services that can be leveraged in data engineering projects.

5. Processing and Warehousing of Data:

Data processing involves handling two types of data: batch data and streaming data. Batch data refers to accumulated data over a specific period, while streaming data refers to real-time or near-real-time data. Apache Spark is a popular tool used for both batch and streaming data processing.
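The difference between the two modes can be sketched in plain Python, without Spark. This is only an illustration of the idea, not how Spark itself is implemented, and the event format `(flavor, scoops)` is an assumption of the example. The batch function waits for all the data and returns one result; the streaming function emits an updated result after every event.

```python
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[str, int]  # (flavor, scoops), a made-up event shape


def batch_totals(events: Iterable[Event]) -> Dict[str, int]:
    """Batch: consume the whole accumulated data set, then return one result."""
    totals: Dict[str, int] = {}
    for flavor, scoops in events:
        totals[flavor] = totals.get(flavor, 0) + scoops
    return totals


def streaming_totals(events: Iterable[Event]) -> Iterator[Dict[str, int]]:
    """Streaming: update the running totals and emit them after each event."""
    totals: Dict[str, int] = {}
    for flavor, scoops in events:
        totals[flavor] = totals.get(flavor, 0) + scoops
        yield dict(totals)  # snapshot of the state so far


if __name__ == "__main__":
    day = [("Vanilla", 2), ("Mint", 1), ("Vanilla", 3)]
    print(batch_totals(day))
    for snapshot in streaming_totals(day):
        print(snapshot)
```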

Gain proficiency in Spark’s architecture, execution model, and data manipulation using Spark SQL and data frames. Furthermore, ETL (Extract, Transform, Load) processes are a vital part of data engineering, and understanding data warehousing concepts and tools like Apache Hive and Snowflake will enhance your skills in this area.
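The three ETL stages can be sketched with the standard library alone. In this hedged example, the CSV text stands in for a source system and an in-memory SQLite table stands in for a warehouse; the column names, sample rows, and function names are all invented for illustration. A production pipeline would typically use tools such as Spark and a real warehouse instead.

```python
import csv
import io
import sqlite3

# Hypothetical raw export with messy whitespace, casing, and one bad row.
RAW_CSV = """store,flavor,scoops
Downtown, vanilla ,120
Airport,MINT,45
Airport,mint,not_a_number
"""


def extract(text):
    """Extract: read raw rows from the source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim whitespace, normalize casing, drop unparseable rows."""
    clean = []
    for row in rows:
        try:
            scoops = int(row["scoops"])
        except ValueError:
            continue  # skip rows whose quantity is not a number
        clean.append((row["store"].strip(), row["flavor"].strip().title(), scoops))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (store TEXT, flavor TEXT, scoops INTEGER)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(text, conn):
    load(transform(extract(text)), conn)


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")
    run_etl(RAW_CSV, warehouse)
    print(warehouse.execute("SELECT * FROM sales").fetchall())
```

Keeping each stage in its own function makes it easier to test the transformation logic separately from the source and the target.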

6. Workflow Scheduling:

Workflow scheduling involves automating and managing data processing pipelines. Apache Airflow is a powerful tool used for scheduling and orchestrating workflows in data engineering projects.

Learning Airflow will enable you to create and manage complex data pipelines, ensuring timely data delivery and coordination between different stages of the data engineering process.
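Orchestration tools like Airflow model a pipeline as a directed acyclic graph (DAG) of tasks. The sketch below does not use Airflow; it only illustrates the dependency-ordering idea with Python's standard `graphlib` module (Python 3.9 or later). The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
PIPELINE = {
    "extract_sales": set(),
    "extract_inventory": set(),
    "transform": {"extract_sales", "extract_inventory"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
}


def execution_order(dag):
    """Return one valid order in which the tasks can run."""
    return list(TopologicalSorter(dag).static_order())


if __name__ == "__main__":
    for task in execution_order(PIPELINE):
        print("running", task)
```

The two extract tasks have no dependency on each other, so a scheduler is free to run them in either order or in parallel. A real orchestrator adds scheduling, retries, and monitoring on top of this ordering.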

Optional Skills:

Once you have acquired expertise in the core areas of data engineering, you may consider expanding your knowledge into additional optional skills. These skills can enhance your capabilities and make you stand out in the job market. Some optional skills include:

Visualization Tools:

Power BI and Tableau are popular visualization tools used to create interactive and visually appealing dashboards. Learning these tools can help you present data insights effectively.

Containers:

Knowledge of containerization technologies such as Docker and Kubernetes can be beneficial, as they facilitate the deployment and management of applications and services.

Conclusion:

Becoming a Data Engineer requires a diverse skill set and a solid understanding of various tools and technologies. By following this roadmap, you can build a strong foundation and gain expertise in programming languages, computing fundamentals, databases, cloud computing, data processing, and workflow scheduling. Continuous learning and staying updated with emerging technologies will ensure you thrive in this field.

As a Data Engineer, you will play a vital role in enabling data-driven decision-making and contribute to the success of organizations in today’s data-driven world.

Remember, the demand for skilled Data Engineers is high, although salaries for this role vary. Invest in upskilling yourself and embrace the opportunities that lie ahead. Best of luck on your journey to becoming a successful Data Engineer!

Note:

The information provided in this article is based on the current industry trends and practices as of 2024. It is essential to stay updated with the latest developments in the field of data engineering to ensure continued professional growth.
